Business Students Have Different Views about Their Performance and Approach to Study in Relation to Exam Formats, Depending on Attitudes Towards Digital Teaching

Abstract

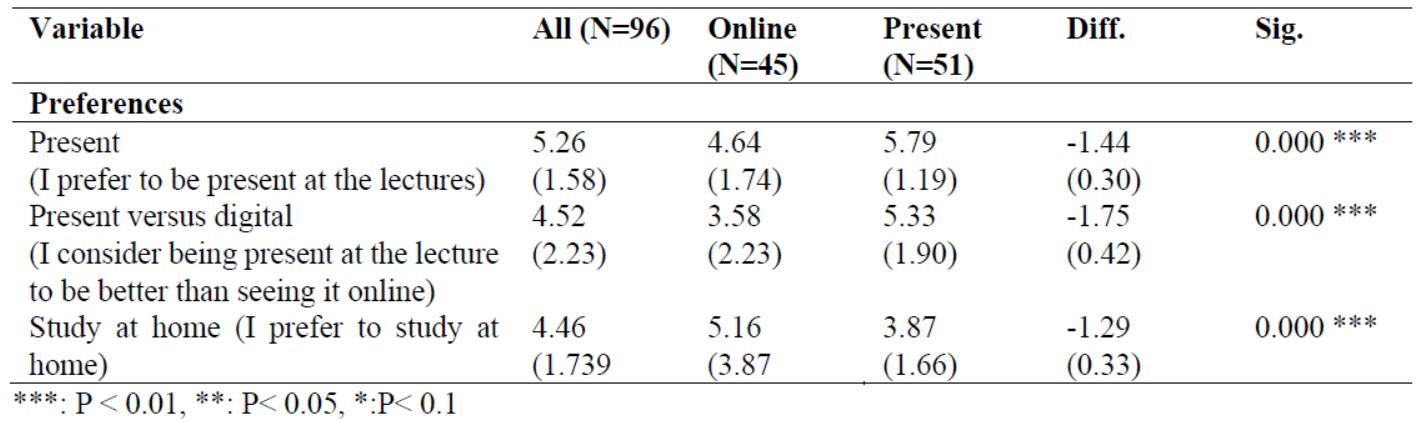

The aim of this article is to examine whether there are substantial differences in attitudes towards teaching methods and choice of assessment between students who attend on campus and those who prefer the online version. During COVID-19, we had a unique opportunity to compare students who took the same course and sat identical exams. The same questionnaire was distributed to both groups in a compulsory economics course at the Norwegian University of Science and Technology (NTNU). The method is a pairwise comparison of mean values using t-tests. The findings show a significant difference between the two groups. Those who chose to be on campus had a higher Grade Point Average (GPA) from high school, and they preferred the traditional exam format. Those who chose to follow the lectures digitally favoured multiple-choice tests or home-based 'open-book' exams. This means that both teaching methods and the choice of exam format can have a major impact on how students are ranked.
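A pairwise comparison of group means of this kind can be sketched as an independent two-sample t-test. The abstract does not say which variant was used, so the sketch below assumes Welch's unequal-variance form; the function name `welch_t_test` and the response values are hypothetical illustrations, not the study's data. It returns the t statistic and the Welch-Satterthwaite degrees of freedom; a p-value would then be read from the t distribution, for example with `scipy.stats.ttest_ind(a, b, equal_var=False)`.

```python
import math
from statistics import mean, variance


def welch_t_test(a, b):
    """Return (t statistic, Welch-Satterthwaite df) for two independent samples.

    Hypothetical helper for illustration; assumes Welch's unequal-variance t-test.
    """
    n_a, n_b = len(a), len(b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two observations")
    # Sample variances use the n - 1 denominator.
    var_a, var_b = variance(a), variance(b)
    # Squared standard errors of each group mean.
    se_a, se_b = var_a / n_a, var_b / n_b
    t = (mean(a) - mean(b)) / math.sqrt(se_a + se_b)
    df = (se_a + se_b) ** 2 / (se_a ** 2 / (n_a - 1) + se_b ** 2 / (n_b - 1))
    return t, df


# Hypothetical 1-5 Likert answers to one questionnaire item (NOT the study's data).
campus = [4, 5, 4, 3, 5, 4, 4, 5]
online = [3, 2, 4, 3, 2, 3, 3, 2]
t, df = welch_t_test(campus, online)
print(f"t = {t:.2f}, df = {df:.1f}")  # prints: t = 4.24, df = 14.0
```

With equal group sizes and equal sample variances, as in this toy example, the Welch degrees of freedom reduce to n_a + n_b - 2 = 14, the same value a pooled Student's t-test would use; with unequal groups, as is typical when students self-select into campus or online attendance, the two variants can diverge.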

This work is licensed under a Creative Commons Attribution 4.0 International License.

Copyright for this article is retained by the author(s), with first publication rights granted to the journal.

This is an open-access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).
