Optimizing Logcumsumexp on Cambricon MLU: Architecture-Aware Scheduling and Memory Management

Abstract

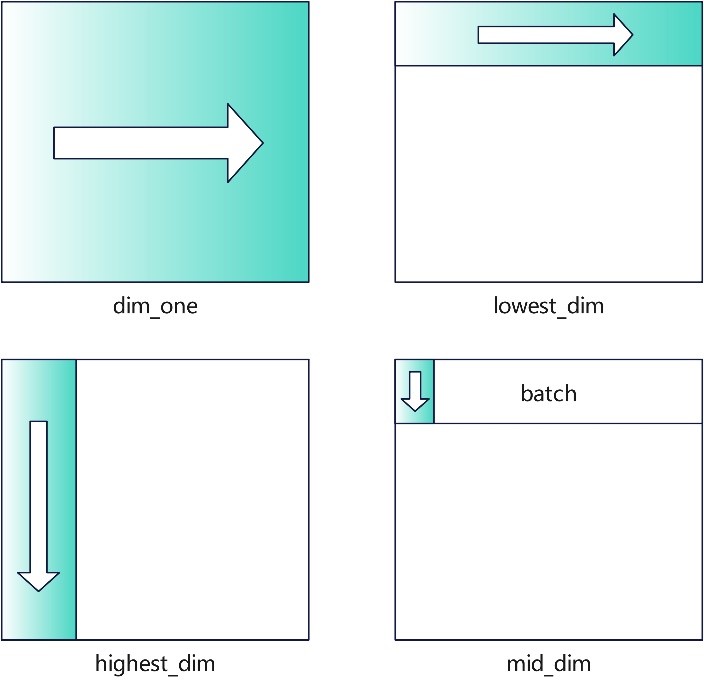

The Logcumsumexp algorithm is a core method for numerically stable cumulative summation in logarithmic space. It is especially suited to computations involving extremely small or extremely large values. Because it works in logarithmic space, it avoids the underflow and overflow that commonly arise in probability calculations, deep learning, and statistical modeling, which makes it an important high-performance computing primitive. In recent years, China's chip industry has grown steadily, and the domestic MLU computing platform from Cambricon Technologies has given users worldwide a new option. Building on the Cambricon MLU platform and its hardware architecture, this paper presents MLULCSE, a Logcumsumexp implementation that computes Logcumsumexp along any specified dimension of a tensor of arbitrary rank and is optimized for different types of Logcumsumexp tasks. We categorize tasks into four types and apply a strategy tailored to the hardware architecture for each type, which yields efficient Logcumsumexp computation. This work enables efficient probabilistic computing on domestic AI accelerators. Experimental results show that the hardware time of MLULCSE on an MLU 370-X4 stays within 7 times that of PyTorch's logcumsumexp on a Tesla V100, and in some cases is as low as 0.42 times.
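The abstract combines two ideas: stable accumulation in log space, and applying that scan along an arbitrary dimension of a tensor. The minimal pure-Python sketch below illustrates both under stated assumptions. It uses the running recurrence r_i = log(exp(r_{i-1}) + exp(x_i)) with r_{-1} = -inf, evaluated by shifting by the larger argument so no exponent overflows. It also assumes a row-major tensor stored as a flat list, viewed as (outer, length, inner) around the scanned dimension. The helper names (`log_add_exp`, `logcumsumexp_1d`, `logcumsumexp`) and the (outer, length, inner) view are hypothetical illustrations. They are not MLULCSE's kernels and do not correspond to its four task categories.

```python
# Illustrative sketch only: hypothetical helpers, not MLULCSE's implementation.
import math
from typing import List, Sequence


def log_add_exp(a: float, b: float) -> float:
    """Return log(exp(a) + exp(b)) without overflow or underflow."""
    if a == -math.inf:
        return b
    if b == -math.inf:
        return a
    m = max(a, b)
    # Shift by the larger argument: exp(min - m) lies in (0, 1], so it cannot overflow.
    return m + math.log1p(math.exp(min(a, b) - m))


def logcumsumexp_1d(xs: Sequence[float]) -> List[float]:
    """Inclusive scan: out[i] = log(sum_{j <= i} exp(xs[j]))."""
    out: List[float] = []
    acc = -math.inf  # log(0), the identity element of log_add_exp
    for x in xs:
        acc = log_add_exp(acc, x)
        out.append(acc)
    return out


def logcumsumexp(data: Sequence[float], shape: Sequence[int], dim: int) -> List[float]:
    """Apply logcumsumexp along `dim` of a row-major tensor stored flat in `data`.

    The tensor is viewed as (outer, length, inner): `outer` is the product of
    the sizes before `dim`, and `inner` is the product of the sizes after it.
    """
    if dim < 0:
        dim += len(shape)
    outer = math.prod(shape[:dim])
    length = shape[dim]
    inner = math.prod(shape[dim + 1:])
    out = [0.0] * len(data)
    for o in range(outer):
        for i in range(inner):
            base = o * length * inner + i
            # Consecutive elements of one scan line are `inner` apart in memory.
            idx = [base + k * inner for k in range(length)]
            scanned = logcumsumexp_1d([data[j] for j in idx])
            for j, v in zip(idx, scanned):
                out[j] = v
    return out
```

For example, `logcumsumexp_1d([1000.0, 1000.0])` returns 1000 + log 2 as its second element, whereas evaluating log(exp(1000) + exp(1000)) directly overflows in double precision. Because `log_add_exp` is associative with identity -inf, the sequential loop could in principle be replaced by a parallel prefix scan. Whether and how MLULCSE does this is not stated in this section.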


This work is licensed under a Creative Commons Attribution 4.0 International License.

Copyright for this article is retained by the author(s), with first publication rights granted to the journal.

This is an open-access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).
