Generative Graph-Based Model Inversion Attack on Graph Neural Networks

Abstract

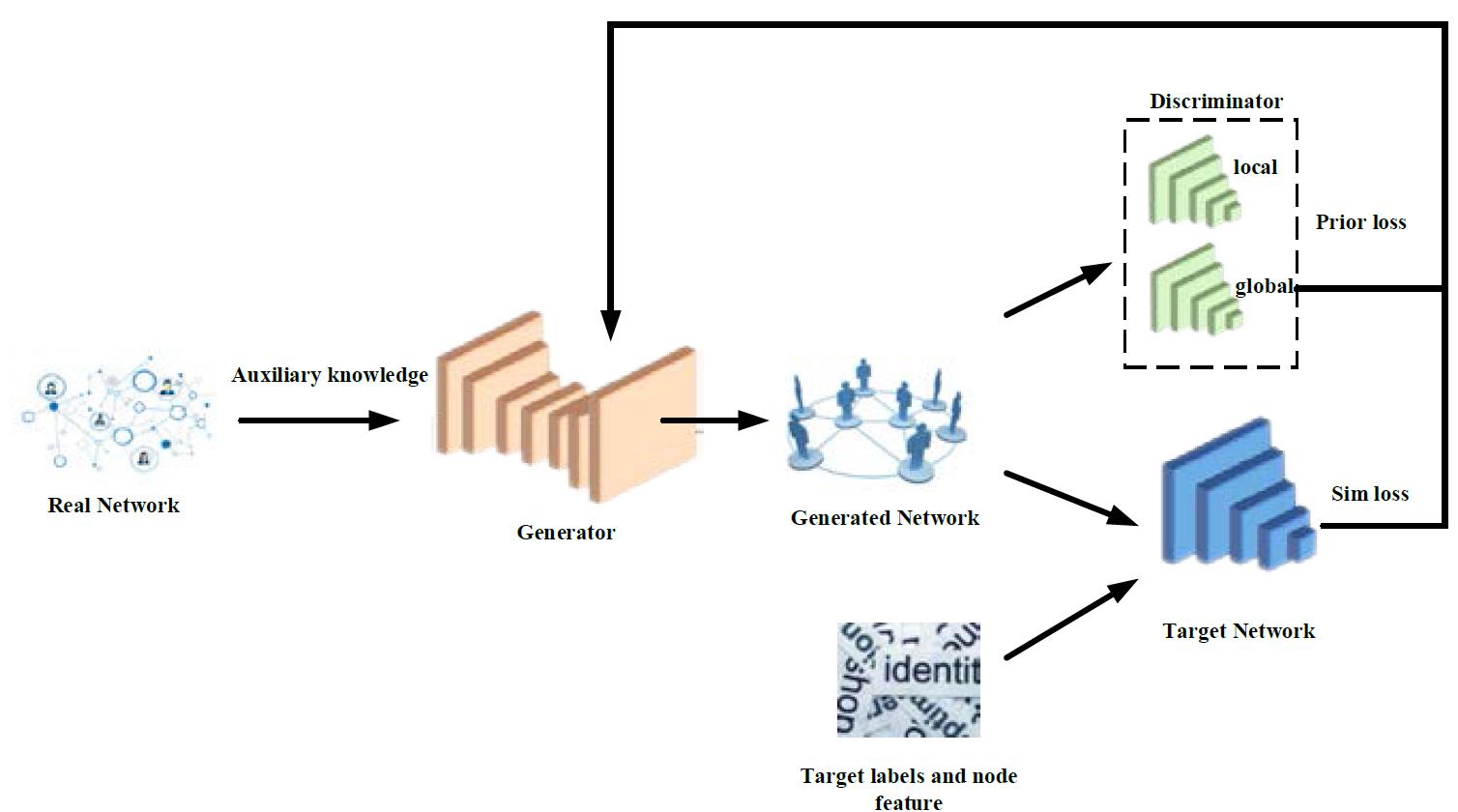

To address the privacy leakage risks of Graph Neural Networks (GNNs) in black-box scenarios, this paper proposes a Generative Graph-based Model Inversion Attack on GNNs (GenG-MIA). The method builds a generative attack framework that combines public knowledge distillation with structural optimization strategies, and in doing so addresses three challenges: the high-dimensional sparsity of graph-structured data, generative bias, and mode collapse. GenG-MIA operates in two stages. First, in the public knowledge distillation stage, a Wasserstein GAN (WGAN) generator and discriminator are trained on public datasets; a diversity loss term improves the authenticity and diversity of the generated graphs, and local and global discriminators are introduced to mitigate the semantic gap. Second, in the structure revelation stage, latent vector projections are optimized to align with the feature space of the target model, thereby recovering the missing sensitive structures of the training graphs. Experimental results show that GenG-MIA outperforms existing methods in both attack accuracy and efficiency, reconstructing the topological structures of the target training graphs and providing a new paradigm for assessing the privacy risks of GNN models. This study further extends generative attacks to complex graph data scenarios and offers theoretical references for privacy protection and robust model design.
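The structure revelation stage can be illustrated with a minimal sketch: the attacker never sees the private graph and only queries the target model for a score, then searches the generator's latent space for a vector whose generated graph scores highest. Everything here is a hypothetical stand-in, not the paper's method. `generate` replaces a trained WGAN generator with a fixed random linear map from latent vector to edge logits, `target_confidence` replaces a trained GNN's confidence with a toy score that rewards agreement with a hidden graph, and a simple (1+1) random search replaces the paper's latent projection optimization. The hidden graph is drawn from the same toy generator, which mirrors the assumption that a generator distilled from public data covers the private distribution.

```python
import random

N_NODES = 5      # hypothetical graph size
LATENT_DIM = 8   # hypothetical latent dimension
rng = random.Random(0)

# All node pairs (i, j) with i < j; one generator weight vector per pair.
PAIRS = [(i, j) for i in range(N_NODES) for j in range(i + 1, N_NODES)]
# Hypothetical stand-in for a generator trained in the distillation stage:
# a fixed random linear map from a latent vector to per-edge logits.
W = {p: [rng.gauss(0, 1) for _ in range(LATENT_DIM)] for p in PAIRS}


def generate(z):
    """Map a latent vector to a symmetric 0/1 adjacency matrix (no self-loops)."""
    adj = [[0] * N_NODES for _ in range(N_NODES)]
    for (i, j), w in W.items():
        logit = sum(wk * zk for wk, zk in zip(w, z))
        if logit > 0:  # equivalent to sigmoid(logit) > 0.5
            adj[i][j] = adj[j][i] = 1
    return adj


# Hypothetical private training graph. The attacker can only observe it
# through the black-box score below (a toy replacement for a trained GNN).
_PRIVATE = generate([rng.gauss(0, 1) for _ in range(LATENT_DIM)])


def target_confidence(adj):
    """Black-box query: fraction of node pairs whose edge status matches."""
    hits = sum(adj[i][j] == _PRIVATE[i][j] for i, j in PAIRS)
    return hits / len(PAIRS)


def invert(queries=300, sigma=0.5, seed=1):
    """(1+1) random search over the latent space using only score queries."""
    r = random.Random(seed)
    z = [r.gauss(0, 1) for _ in range(LATENT_DIM)]
    best = target_confidence(generate(z))
    start = best
    for _ in range(queries):
        # Perturb the current latent vector and query the black-box target.
        cand = [zk + r.gauss(0, sigma) for zk in z]
        score = target_confidence(generate(cand))
        if score >= best:  # accept only non-worsening moves
            z, best = cand, score
    return generate(z), start, best


adj, start, best = invert()
print(f"confidence: {start:.2f} -> {best:.2f}")
```

Because candidates are accepted only when the score does not drop, the returned confidence never falls below the starting value. A gradient-free search was chosen here only because the setting is black-box. A real attack would query an actual GNN and might use a stronger optimizer, but the paper's specific choice is not stated in this abstract.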


This work is licensed under a Creative Commons Attribution 4.0 International License.

Copyright for this article is retained by the author(s), with first publication rights granted to the journal.

This is an open-access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).
