3D Object Detection via Residual SqueezeDet

Abstract

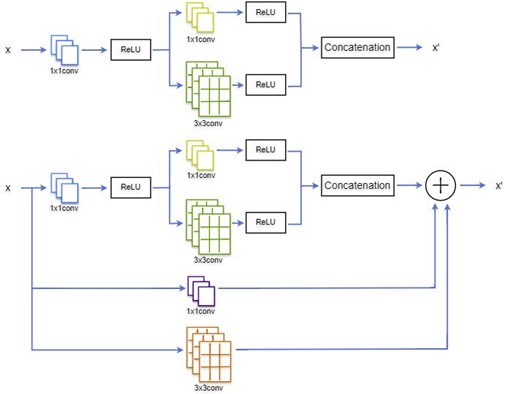

Three-dimensional object detection is a critical task in computer vision with applications in autonomous driving, robotics, and augmented reality. This paper introduces Residual SqueezeDet, a novel network architecture that enhances the performance of 3D object detection on the KITTI dataset. Building upon the efficient SqueezeDet framework, we propose the Residual Fire module, which incorporates skip connections inspired by ResNet architectures into the original Fire module. This innovation improves gradient flow, enhances feature propagation, and allows for more effective training of deeper networks. Our method leverages point cloud and image-based features, employing a Residual SqueezeDet to effectively capture local and global context. Extensive experiments on the KITTI dataset demonstrate that Residual SqueezeDet significantly outperforms the original SqueezeDet, with particularly notable improvements in challenging scenarios. The proposed model maintains computational efficiency while achieving state-of-the-art performance, making it well-suited for real-time applications in autonomous driving. Our work contributes to the field by providing a more accurate and robust solution for 3D object detection, paving the way for improved perception systems in dynamic environments.

References

[2] Qi, C. R., Su, H., Mo, K., & Guibas, L. J., (2017). PointNet: Deep learning on point sets for 3D classification and segmentation. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017, 652-660.

[3] Qi, C. R., Yi, L., Su, H., & Guibas, L. J. (2017). PointNet++: Deep hierarchical feature learning on point sets in a metric space. Advances in Neural Information Processing Systems (NIPS), 2017, 5099-5108.

[4] Zhou, Y., & Tuzel, O. (2018). VoxelNet: End-to-end learning for point cloud based 3D object detection. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018, 4490-4499. https://doi.org/10.1109/CVPR.2018.00472

[5] Ren, S., He, K., Girshick, R., & Sun, J. (2015). Faster r-cnn: Towards real-time object detection with region proposal networks," in Advances in Neural Information Processing Systems (NIPS), 2015, 91-99.

[6] Liu, W., Anguelov, D., Erhan, D., Szegedy, C., Reed, S., Fu, C. Y., & Berg, A. C. (2016). Ssd: Single shot multibox detector. European Conference on Computer Vision (ECCV), 2016, 21-37. https://doi.org/10.1007/978-3-319-46448-0_2

[7] Redmon, J., Divvala, S., Girshick, R., & Farhadi, A. (2016). You only look once: Unified, real-time object detection. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016, 779-788. https://doi.org/10.1109/CVPR.2016.91

[8] Wu, B., Iandola, F., Jin, P. H., & Keutzer, K. (2017). Squeezedet: Unified, small, low power fully convolutional neural networks for real-time object detection for autonomous driving. in IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 2017, 446-454. https://doi.org/10.1109/CVPRW.2017.60

[9] Iandola, F. N., Han, S., Moskewicz, M. W., Ashraf, K., Dally, W. J., & Keutzer, K. (2016). Squeezenet: Alexnet-level accuracy with 50x fewer parameters and <0.5 mb model size," arXiv preprint arXiv:1602.07360, 2016.

[10] Zhang, S., Wen, L., Bian, X., Lei, Z., & Li, S. Z. (2018). Single-shot refinement neural network for object detection. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018, 4203-4212. https://doi.org/10.1109/CVPR.2018.00442

[11] Qin, Z., Li, Z., Zhang, Z., Bao, Y., Yu, G., Peng, Y., & Sun, J. (2019). Thundernet: Towards real-time generic object detection on mobile devices. IEEE International Conference on Computer Vision (ICCV), 2019, 6718-6727. https://doi.org/10.1109/ICCV.2019.00682

[12] Lang, A. H., Vora, S., & Matusik, D. B. (2019). PointPillars: Fast Encoders for Object Detection from Point Clouds. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019, 12697-12705. https://doi.org/10.1109/CVPR.2019.01298

[13] Shi, S., Wang, C., Li, X., Guo, H., Li, J., & Qi, X. (2020). PV-RCNN: Point-Voxel Feature Set Abstraction for 3D Object Detection. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2020, 10529-10538. https://doi.org/10.1109/CVPR42600.2020.01054

[14] Wang, G., Wu, J., Tian, B., Teng, S., Chen, L., & Cao, D. (2022). CenterNet3D: An Anchor Free Object Detector for Point Cloud. IEEE Transactions on Intelligent Transportation Systems, 23(8), 12953-12965. https://doi.org/10.1109/TITS.2021.3118698

This work is licensed under a Creative Commons Attribution 4.0 International License.

Copyright for this article is retained by the author(s), with first publication rights granted to the journal.

This is an open-access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

1.png)