Theoretical Analysis of Adam Optimizer in the Presence of Gradient Skewness

Abstract

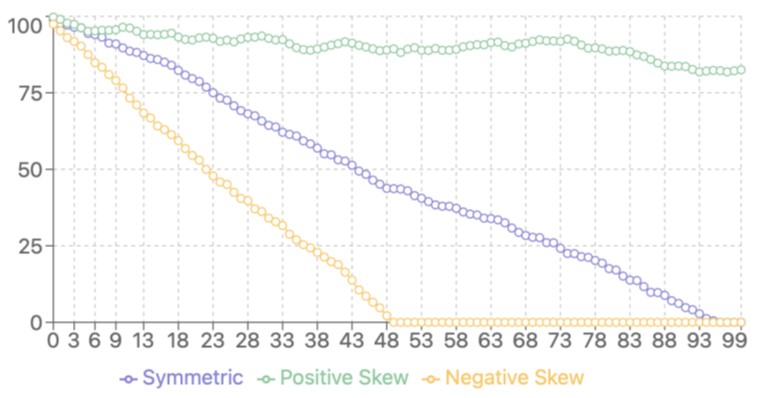

The Adam optimizer has become a cornerstone of deep learning, widely adopted for its adaptive learning rates and momentum-based updates. However, its behavior under non-standard conditions, particularly skewed gradient distributions, remains underexplored. This paper presents a theoretical analysis of Adam in the presence of skewed gradients, a scenario that arises frequently in real-world applications because of imbalanced datasets or inherent problem characteristics. We extend the standard convergence analysis of Adam to account explicitly for gradient skewness and derive new bounds that characterize the optimizer’s performance under these conditions. Our main contributions are: (1) a formal proof of Adam’s convergence under skewed gradient distributions, (2) quantitative error bounds that capture the impact of skewness on optimization outcomes, and (3) insights into how skewness affects Adam’s adaptive learning rate mechanism. We show that gradient skewness can bias parameter updates and can slow convergence relative to symmetric gradient distributions. We also provide practical recommendations for mitigating these effects, including adaptive gradient clipping and distribution-aware hyperparameter tuning. Our findings help bridge the gap between Adam’s empirical success and its theoretical underpinnings, and offer guidance for practitioners facing non-standard optimization landscapes in deep learning.
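To make the setting concrete, the following is a minimal sketch of how one might probe Adam under skewed gradient noise. It is an illustration only, not the analysis or experimental setup of this paper. The scalar quadratic objective, the shifted-exponential noise model, and the names `sample_skewness`, `adam_step`, `skewed_noise`, `symmetric_noise`, and `run` are all assumptions introduced here; the update rule is the standard bias-corrected Adam step.

```python
import math
import random


def sample_skewness(xs):
    """Biased sample skewness g1 = m3 / m2**1.5 of a list of floats."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n
    m3 = sum((x - mean) ** 3 for x in xs) / n
    return m3 / m2 ** 1.5


def adam_step(theta, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One scalar Adam update with bias correction; t starts at 1."""
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad * grad
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v


def skewed_noise(rng):
    """Assumed noise model: zero-mean, right-skewed (Exp(1) minus its mean)."""
    return rng.expovariate(1.0) - 1.0


def symmetric_noise(rng):
    """Assumed baseline: zero-mean, unit-variance Gaussian noise."""
    return rng.gauss(0.0, 1.0)


def run(noise, steps=5000, seed=0, lr=1e-2):
    """Run Adam on f(theta) = 0.5 * theta**2 with noisy gradients.

    Returns the average iterate over the second half of the run, a rough
    proxy for where the iterates settle.
    """
    rng = random.Random(seed)
    theta, m, v = 1.0, 0.0, 0.0
    tail = []
    for t in range(1, steps + 1):
        grad = theta + noise(rng)  # true gradient plus zero-mean noise
        theta, m, v = adam_step(theta, grad, m, v, t, lr=lr)
        if t > steps // 2:
            tail.append(theta)
    return sum(tail) / len(tail)


if __name__ == "__main__":
    rng = random.Random(1)
    print("skewness (skewed):   ", sample_skewness([skewed_noise(rng) for _ in range(20000)]))
    print("skewness (symmetric):", sample_skewness([symmetric_noise(rng) for _ in range(20000)]))
    print("mean late iterate (skewed):   ", run(skewed_noise))
    print("mean late iterate (symmetric):", run(symmetric_noise))
```

Both noise models have zero mean and unit variance, so any difference between the two late-iterate averages comes from higher-order properties of the noise, such as skewness, not from a shift in the expected gradient. A single seed is only suggestive; averaging over many seeds would be needed before drawing conclusions.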


This is an open-access article distributed under the terms and conditions of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/).

Copyright for this article is retained by the author(s), with first publication rights granted to the journal.
