How Does the Screen Expansion in the Images through Generative AI Affect the User Experience of Watching the Video on XR Environments?

Abstract

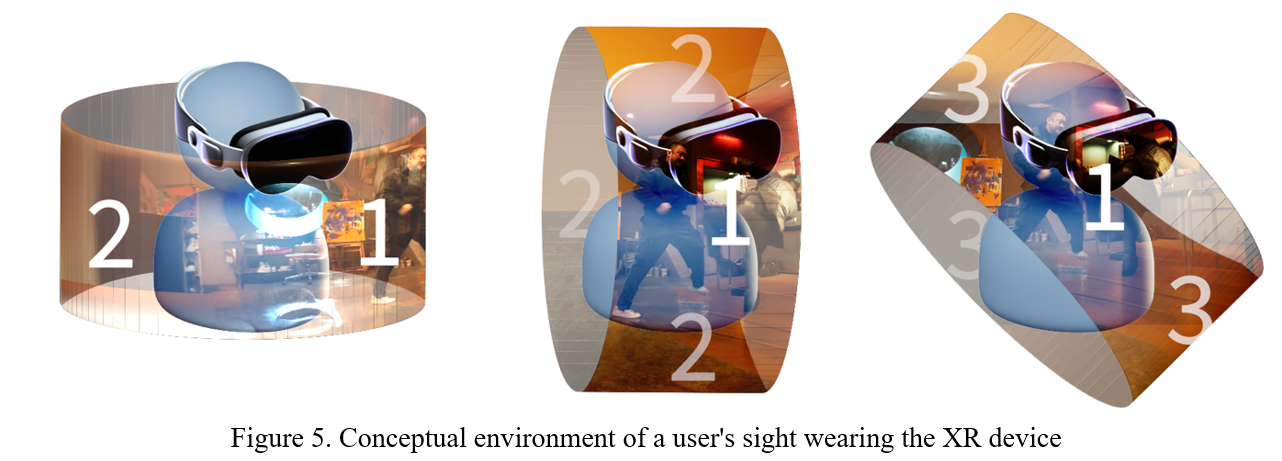

Recent generative AI can extend an existing image beyond its borders by filling in the empty areas around it. This study examined how surrounding a movie screen with such expanded images affects usability when the movie is watched on an XR device. The motivation is that a video with a 16:9 or 21:9 aspect ratio, when viewed on an XR device, can be expanded to occupy the entire screen in extended reality. In the experiment, the movie screen was captured in real time, and Adobe's generative AI was used to expand the captured image in both the vertical and horizontal directions. The stimulus was a 15-second movie clip presented in a VR scenario. Usability was evaluated with the System Usability Scale (SUS) while participants used this screen-expansion function. The results indicate that expanding the screen area on an XR device with images generated in real time by generative AI has a positive effect on usability.
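For readers unfamiliar with SUS, the following minimal sketch shows the standard scoring rule for one participant's questionnaire. It assumes the usual ten-item, five-point form; the function name `sus_score` is hypothetical, and nothing here reproduces the study's own analysis or data.

```python
def sus_score(responses):
    """Return the 0-100 SUS score for one participant.

    `responses` holds the ten item ratings in questionnaire order,
    each an integer from 1 (strongly disagree) to 5 (strongly agree).
    """
    if len(responses) != 10:
        raise ValueError("SUS expects exactly 10 item responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd-numbered items are positively worded: contribution = rating - 1
            total += rating - 1
        else:
            # Even-numbered items are negatively worded: contribution = 5 - rating
            total += 5 - rating

    # The summed contributions (0-40) are scaled to the 0-100 range.
    return total * 2.5
```

A questionnaire answered with the neutral rating 3 on every item yields 50.0 under this rule, and scores from several participants are typically averaged per condition.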

This work is licensed under a Creative Commons Attribution 4.0 International License.

Copyright for this article is retained by the author(s), with first publication rights granted to the journal.

This is an open-access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).
